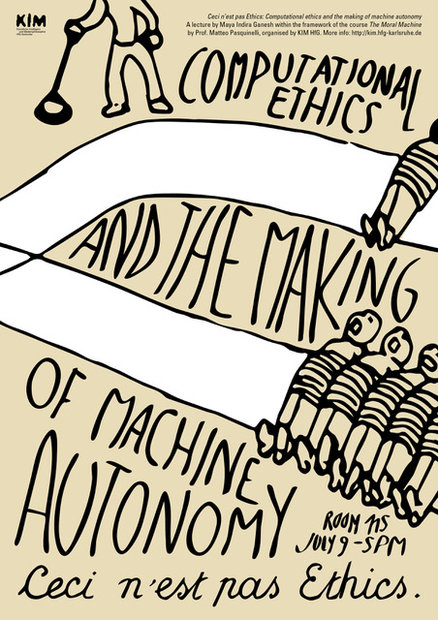

The research group KIM (Artificial Intelligence and Media Philosophy) organized the lecture "Ceci n'est pas Ethics: Computational ethics and the making of machine autonomy" by Maya Indira Ganesh.

We are told that a ‘fully’ autonomous vehicle will be able to make an astonishing range of decisions, from the banal to the complex, as it navigates the world. It will recognise a cyclist, distinguish a dog from a ball, and follow traffic rules. It will also have to decide what to do when something unexpected happens, such as a child suddenly dashing into the street after her dog, which is itself chasing a cyclist. Who or what should the autonomous vehicle avoid hitting – dog, ball, cyclist or child? What if the cyclist is also a child? This scenario mirrors the conundrum posed by the Trolley Problem, a thought experiment that has become a popular framework for the ‘ethics of autonomous driving’.

Maya Indira Ganesh is a new research associate at KIM and HfG Karlsruhe, working in the project “AI and the Society of the Future”, which is funded by the Volkswagen Stiftung.

The lecture is part of the course "The Moral Machine: On the Automation of Ethics" by Matteo Pasquinelli. The course examines the political implications of Artificial Intelligence systems that are deployed across different sectors of the economy and society to automate not only simple perceptual tasks but, increasingly, complex ethical decisions as well.

More information: kim.hfg-karlsruhe.de
